Now that we’re done with the simple stuff, let’s jump to the next level: meshes.

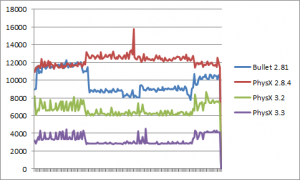

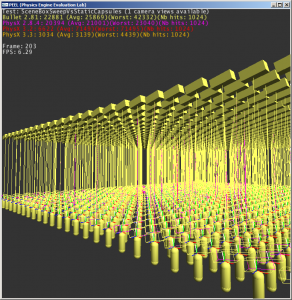

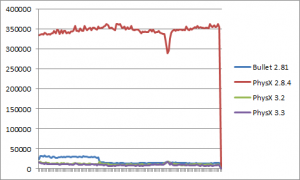

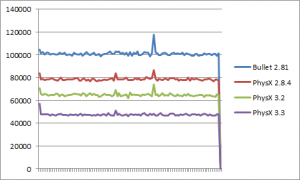

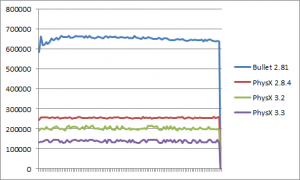

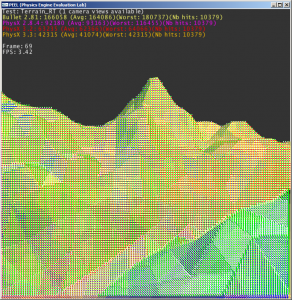

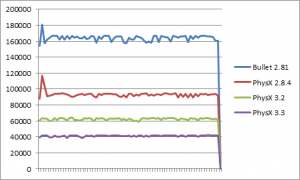

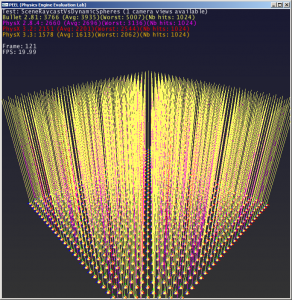

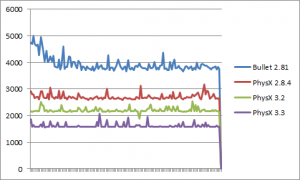

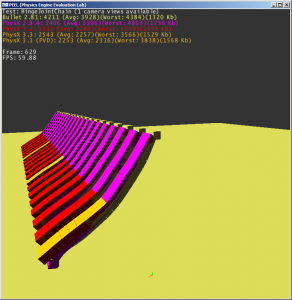

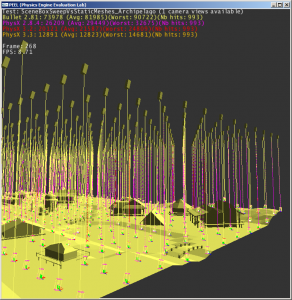

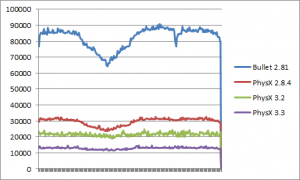

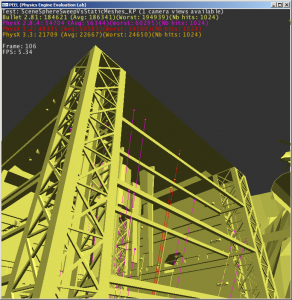

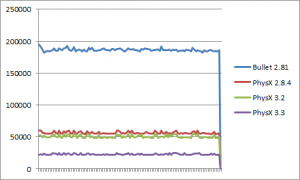

The first mesh scene (“SceneBoxSweepVsStaticMeshes_Archipelago”) performs 1K vertical box sweeps against the mesh level we previously used.
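For readers who want to reproduce something similar, here is a minimal sketch of how a batch of "1K" vertical box sweeps could be laid out: a 32 × 32 grid (1,024 sweeps) of downward sweeps over the level's footprint. The grid layout, the Y-up convention and the `BoxSweep` / `make_vertical_box_sweeps` names are hypothetical. The post does not say how the benchmark actually places its sweeps.

```python
from __future__ import annotations
from dataclasses import dataclass

@dataclass
class BoxSweep:
    origin: tuple[float, float, float]        # start position of the box center
    direction: tuple[float, float, float]     # unit sweep direction
    max_dist: float                           # sweep length
    half_extents: tuple[float, float, float]  # box half-sizes

def make_vertical_box_sweeps(min_x, min_z, max_x, max_z, height, max_dist,
                             half_extents, n=32):
    """Hypothetical layout: an n x n grid of downward box sweeps (Y-up assumed)."""
    sweeps = []
    for i in range(n):
        for j in range(n):
            # Place each sweep at the center of its grid cell.
            x = min_x + (i + 0.5) * (max_x - min_x) / n
            z = min_z + (j + 0.5) * (max_z - min_z) / n
            sweeps.append(BoxSweep((x, height, z), (0.0, -1.0, 0.0),
                                   max_dist, half_extents))
    return sweeps
```

With the default `n=32`, the function returns exactly 1,024 sweeps, all pointing straight down.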

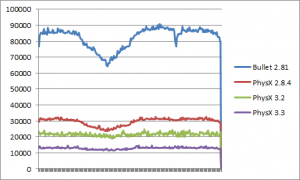

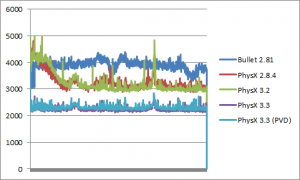

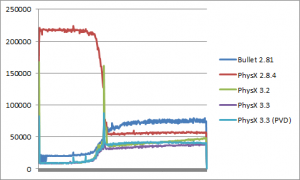

There are no terribly big surprises here. All engines report the same number of hits. Each PhysX version is faster than the one before. They all perform adequately.

PhysX 3.3 is only about 2X faster than PhysX 2.8.4, so 2.8.4 was already in reasonably good shape for this test.

Bullet lags behind, at a bit more than 6X slower than PhysX 3.3 on average.
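The ratios in this post are given "on average" without saying how the average is taken. Below is a minimal sketch of two common conventions, assuming per-frame timings are available for both engines: the ratio of mean frame times, and the mean of per-frame ratios. The function names are hypothetical, and which convention the benchmark uses is an assumption left open here.

```python
def _check(slow_times, fast_times):
    # Both series must be non-empty and cover the same frames.
    if not slow_times or len(slow_times) != len(fast_times):
        raise ValueError("expected two non-empty timing series of the same length")

def average_speedup(slow_times, fast_times):
    """Ratio of mean frame times: 6.0 means 'slow' takes 6X longer on average."""
    _check(slow_times, fast_times)
    mean_slow = sum(slow_times) / len(slow_times)
    mean_fast = sum(fast_times) / len(fast_times)
    return mean_slow / mean_fast

def mean_of_ratios(slow_times, fast_times):
    """Alternative convention: average the per-frame ratios instead."""
    _check(slow_times, fast_times)
    ratios = [s / f for s, f in zip(slow_times, fast_times)]
    return sum(ratios) / len(ratios)
```

The two conventions agree when the ratio is constant across frames and diverge when it is not. The ratio of means weights expensive frames more heavily, which is usually what matters for a frame budget.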

---

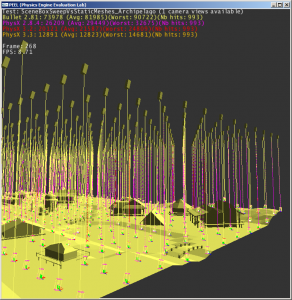

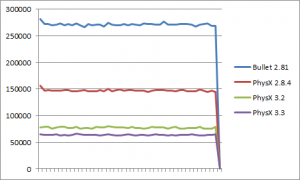

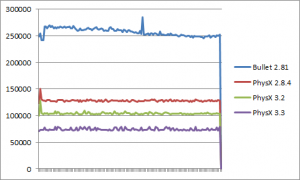

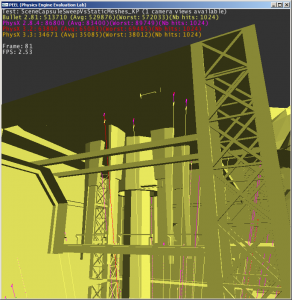

For the next tests we switch back to the Konoko Payne level, which is much more complex and therefore, perhaps, closer to what a modern game has to deal with. We start with sphere-sweeps against it (“SceneSphereSweepVsStaticMeshes_KP”).

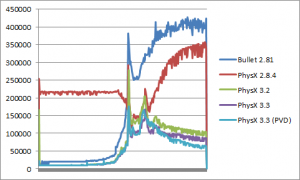

Results are quite similar to what we got for the Archipelago scene: PhysX gets faster with each new version, and 3.3 is a bit more than 2X faster than 2.8.4.

Bullet suffers again, now a bit more than 8X slower than PhysX 3.3 on average.

---

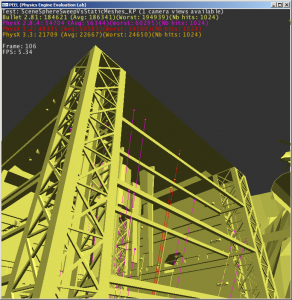

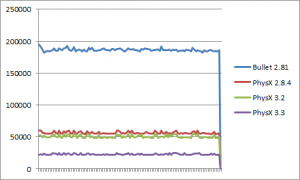

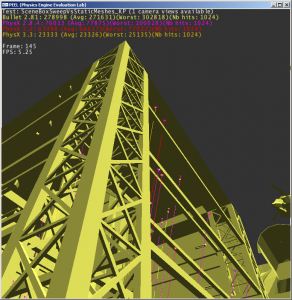

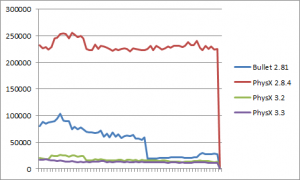

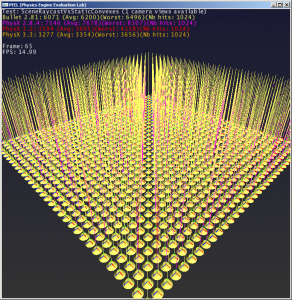

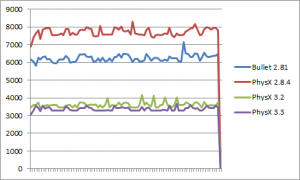

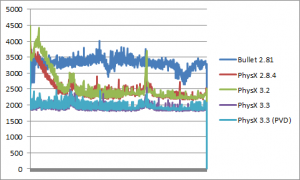

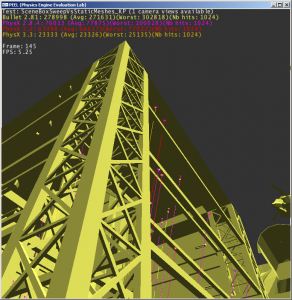

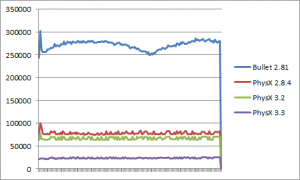

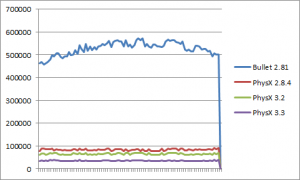

The same test using box-sweeps (“SceneBoxSweepVsStaticMeshes_KP”) reveals the same kind of results again.

This time PhysX 3.3 is about 3.3X faster than 2.8.4.

Remarkably, PhysX 3.3 is more than an order of magnitude faster than Bullet here (about 11.6X)!

---

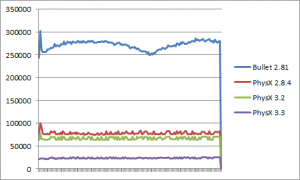

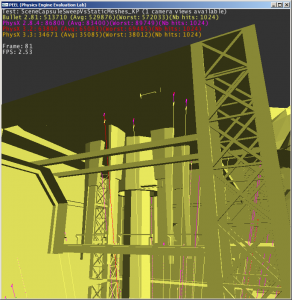

Finally, the same test using capsule-sweeps (“SceneCapsuleSweepVsStaticMeshes_KP”) confirms this trend.

PhysX 3.3 is a bit more than 2X faster than 2.8.4, once again.

As for Bullet, it is now about 15X slower than PhysX 3.3. I feel a bit sorry for Bullet, but I think this proves my point: people who claim PhysX is not optimized for the CPU should pay attention here.

---

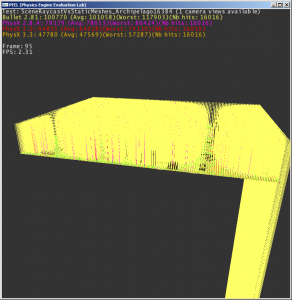

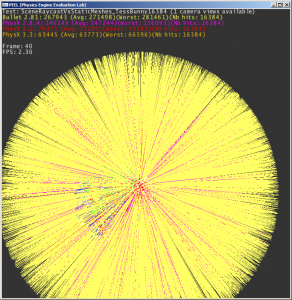

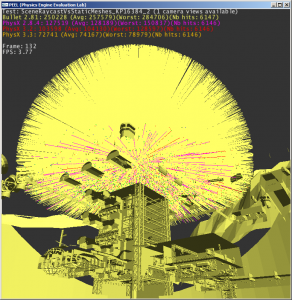

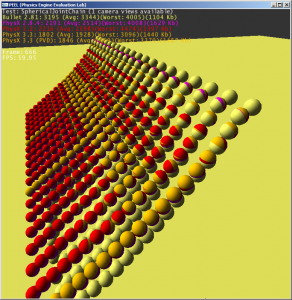

As you may remember from the raycasts section, the KP scene contains a lot of meshes, but each of them is fairly small. We will now test the opposite: the Bunny scene, which contains a single, highly tessellated mesh.

And since we are here to stress test the engines, we are going to run several long radial sweeps against it.

And using large shapes to boot.

This is not supposed to be friendly. This is actually designed to break your engines.
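A "radial" batch of sweeps shoots each shape outward from a common center in many directions. Here is a minimal sketch of generating such a batch. It assumes a Fibonacci-lattice distribution of directions, and the function names are hypothetical. The post does not say how the benchmark picks its directions.

```python
import math

def radial_directions(n):
    """Return n unit vectors spread roughly evenly over the sphere (Fibonacci lattice)."""
    golden_angle = math.pi * (3.0 - math.sqrt(5.0))
    dirs = []
    for i in range(n):
        y = 1.0 - 2.0 * (i + 0.5) / n   # evenly spaced heights strictly inside (-1, 1)
        r = math.sqrt(1.0 - y * y)      # radius of the horizontal circle at height y
        theta = golden_angle * i        # rotate by the golden angle at each step
        dirs.append((r * math.cos(theta), y, r * math.sin(theta)))
    return dirs

def radial_sweeps(center, n, length):
    """Each sweep starts at `center` and travels `length` units along one direction."""
    return [(center, d, length) for d in radial_directions(n)]
```

For example, `radial_sweeps((0.0, 0.0, 0.0), 64, 100.0)` yields 64 (origin, direction, length) tuples. A Fibonacci lattice is used here because a naive latitude/longitude grid clusters directions near the poles.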

So, what do we get?

---

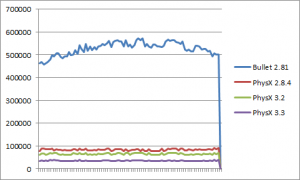

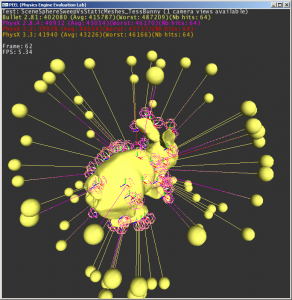

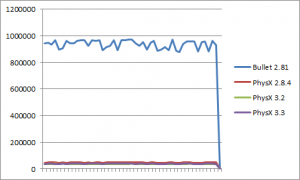

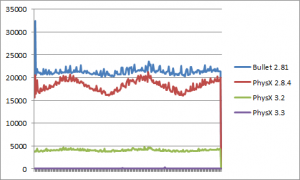

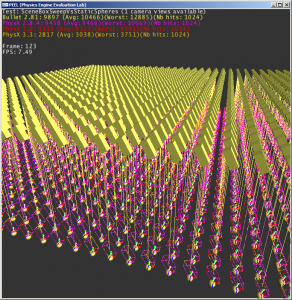

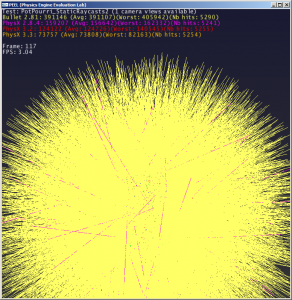

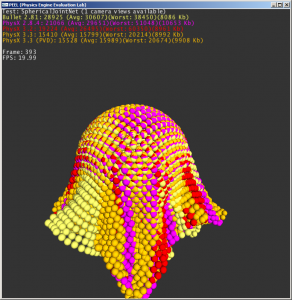

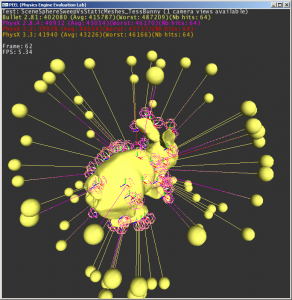

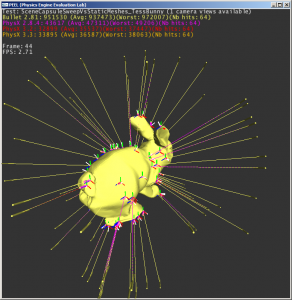

Let’s start with the spheres (“SceneSphereSweepVsStaticMeshes_TessBunny”).

Note that we only use 64 sweeps here, but they take about as long as (or longer than) 16K radial raycasts against the same mesh… Yup, such is the evil nature of this stress test.
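Put differently, assume "16K" means 16,384 raycasts and that the two batches take comparable total time. Then a single sweep here costs at least as much as a couple of hundred raycasts against the same mesh, where $t$ is the average time per query and $N$ the number of queries in each batch:

```latex
\frac{t_{\text{sweep}}}{t_{\text{ray}}} \gtrsim \frac{N_{\text{ray}}}{N_{\text{sweep}}} = \frac{16\,384}{64} = 256
```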

It is so evil that, for the first time, there really isn’t much of a difference between the PhysX versions. We didn’t make much progress here.

Nonetheless, PhysX is an order of magnitude faster than Bullet here. Again, contrary to what naysayers may say, the CPU version of PhysX is actually very fast.

In fact, stay tuned.

---

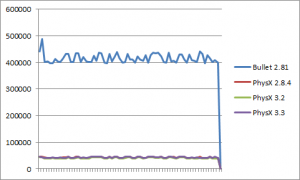

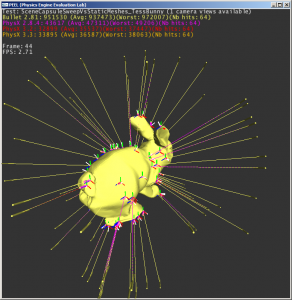

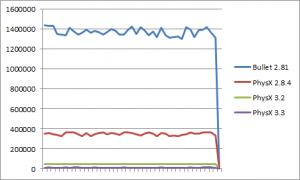

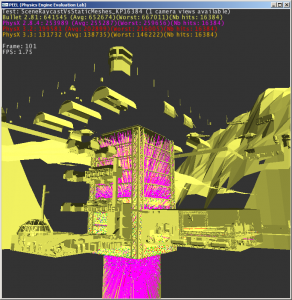

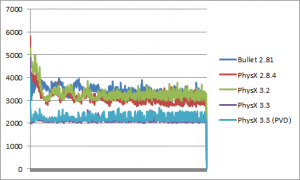

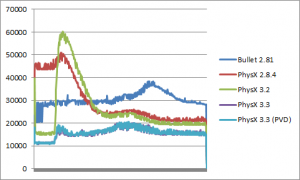

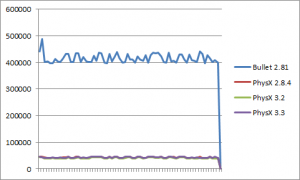

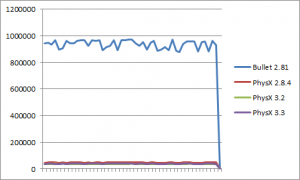

Switch to capsule sweeps (“SceneCapsuleSweepVsStaticMeshes_TessBunny”). Again, only 64 sweeps here.

There is virtually no difference between PhysX 3.2 and 3.3, but both are measurably faster than 2.8.4. Not by much, but that is still better than the previous test, where there was no gain at all.

Now the interesting figure, of course, is the performance ratio compared to Bullet. Yep, PhysX is about 25X faster here. I can’t explain it, and it’s not my job. I’m only providing a reality check for a few people here.

---

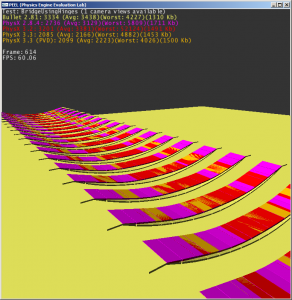

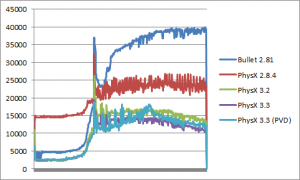

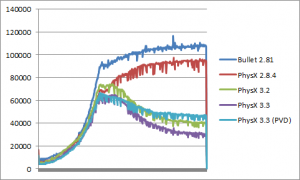

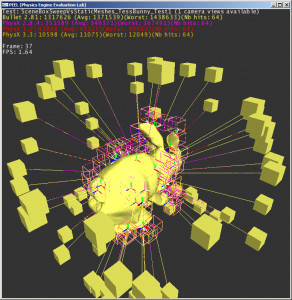

OK, this is starting to get embarrassing for Bullet, so let’s be fair and show that we also blew it in the past. Kind of.

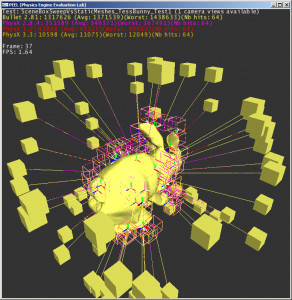

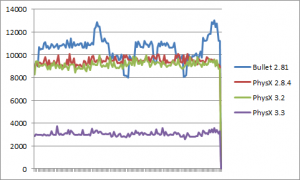

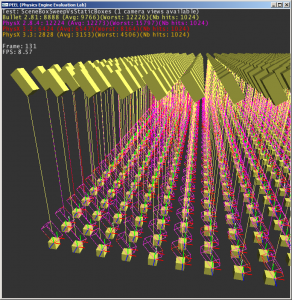

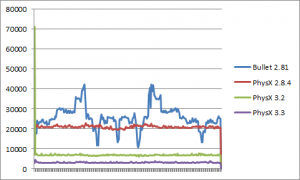

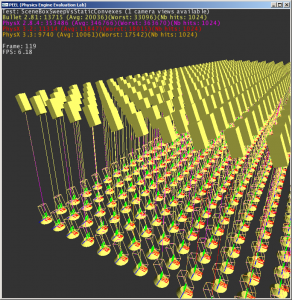

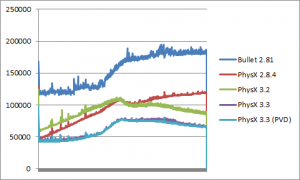

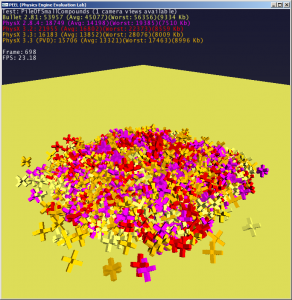

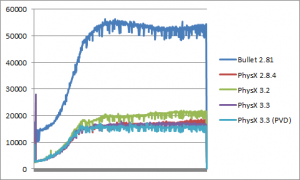

Switch to box sweeps (“SceneBoxSweepVsStaticMeshes_TessBunny_Test1”). And use fairly large boxes because it’s more fun. Again, we only need 64 innocent sweep tests to produce this massacre.

Look at that! Whoa.

“Each PhysX version is faster than the one before”, hell yeah! PhysX 3.3 is about 4X faster than PhysX 3.2, and about 31X faster (!) than PhysX 2.8.4. Again, if you are still using 2.8.4, do yourself a favor and upgrade.

As for Bullet… what can I say? PhysX 3.3 is 123X faster than Bullet in this test. There. That’s two orders of magnitude. This is dedicated to everyone who still thinks that PhysX is “not optimized for the CPU”, or “crippled”, or any other nonsense.
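As a quick sanity check on the figures above, 123X is indeed just over two orders of magnitude. The two PhysX ratios also imply roughly how much 3.2 had already gained over 2.8.4 on this test. This is back-of-the-envelope arithmetic on rounded numbers, not a separate measurement:

```latex
\log_{10} 123 \approx 2.09
\qquad
\frac{t_{2.8.4}}{t_{3.2}} = \frac{t_{2.8.4}/t_{3.3}}{t_{3.2}/t_{3.3}} \approx \frac{31}{4} \approx 7.75
```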

---

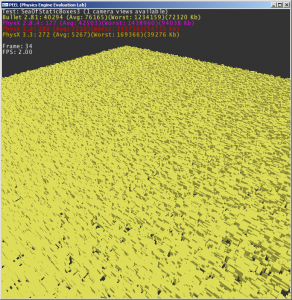

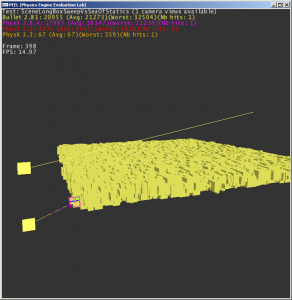

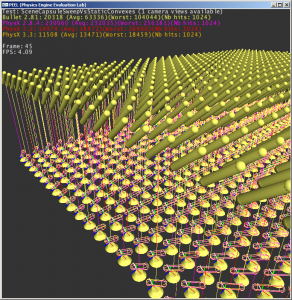

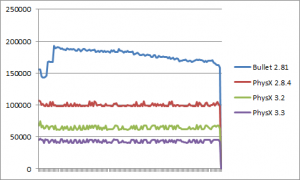

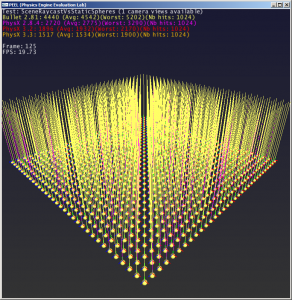

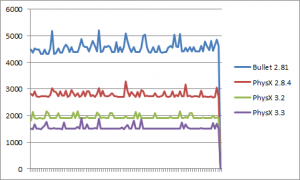

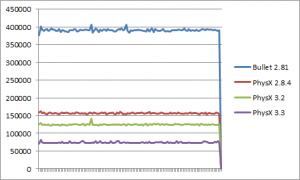

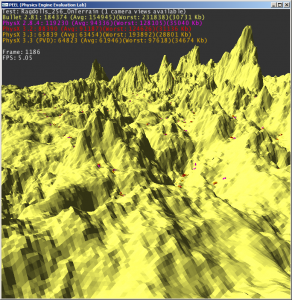

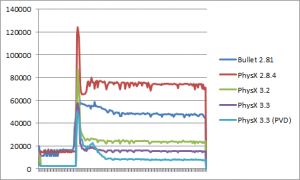

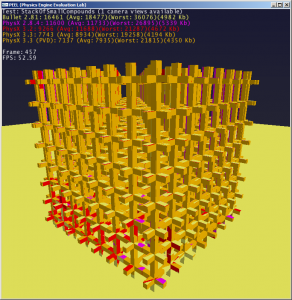

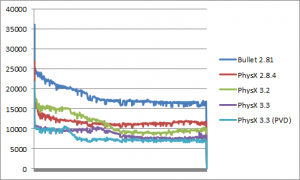

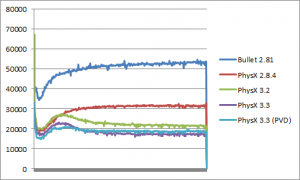

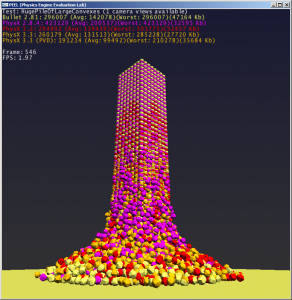

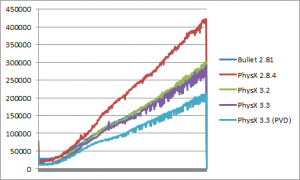

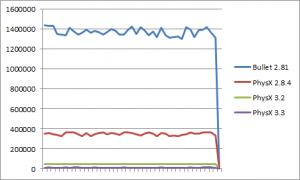

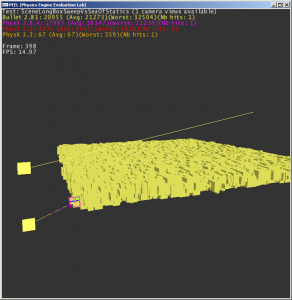

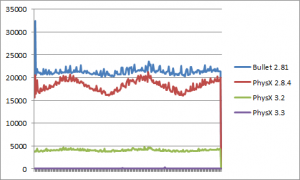

The final test (“SceneLongBoxSweepVsSeaOfStatics”) has a pretty explicit name. It’s just one box sweep. The sweep is very long, going diagonally from one side of the world to the other. The world is massive, made of thousands of statics. So that’s pretty much the worst case you can get.
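Why is a long diagonal sweep such a bad case? One plausible reason, sketched below under stated assumptions, concerns culling that starts from the bounding box of the whole swept volume. A diagonal sweep across the world produces a box that covers the entire world, so every static becomes a candidate, even though the swept box only touches a thin strip along the diagonal. The world layout (an n × n grid of unit boxes), the axis-aligned swept box and all function names are hypothetical. Nothing here describes what PhysX or Bullet actually do internally. The exact test inflates each static by the sweep's half-extents (a Minkowski sum, which is exact for two axis-aligned boxes) and ray-casts the path of the box center against it.

```python
import math

def swept_box_aabb(start, half, direction, dist):
    """AABB enclosing an axis-aligned box swept from `start` along `direction` for `dist` units."""
    end = tuple(s + d * dist for s, d in zip(start, direction))
    lo = tuple(min(a, b) - h for a, b, h in zip(start, end, half))
    hi = tuple(max(a, b) + h for a, b, h in zip(start, end, half))
    return lo, hi

def aabb_overlap(lo_a, hi_a, lo_b, hi_b):
    """True if two axis-aligned boxes overlap (touching counts)."""
    return all(la <= hb and lb <= ha for la, ha, lb, hb in zip(lo_a, hi_a, lo_b, hi_b))

def segment_hits_aabb(p, d, dist, lo, hi):
    """Slab test: does the segment p + t*d, 0 <= t <= dist, touch the box [lo, hi]?"""
    t0, t1 = 0.0, dist
    for pi, di, l, h in zip(p, d, lo, hi):
        if abs(di) < 1e-12:              # segment parallel to this slab
            if pi < l or pi > h:
                return False
        else:
            ta, tb = (l - pi) / di, (h - pi) / di
            if ta > tb:
                ta, tb = tb, ta
            t0, t1 = max(t0, ta), min(t1, tb)
            if t0 > t1:
                return False
    return True

def count_candidates(n, spacing, half_sweep=(1.0, 1.0, 1.0), half_static=(0.5, 0.5, 0.5)):
    """Sweep a box diagonally across a hypothetical n x n grid of static boxes.

    Returns (coarse, exact): statics overlapping the swept AABB, and statics
    the swept box actually touches.
    """
    extent = (n - 1) * spacing
    s = 1.0 / math.sqrt(2.0)
    direction = (s, 0.0, s)              # diagonal in the XZ plane
    dist = extent * math.sqrt(2.0)       # from one corner of the grid to the other
    start = (0.0, 0.0, 0.0)
    sw_lo, sw_hi = swept_box_aabb(start, half_sweep, direction, dist)
    coarse = exact = 0
    for i in range(n):
        for j in range(n):
            c = (i * spacing, 0.0, j * spacing)
            lo = tuple(ci - h for ci, h in zip(c, half_static))
            hi = tuple(ci + h for ci, h in zip(c, half_static))
            if aabb_overlap(sw_lo, sw_hi, lo, hi):
                coarse += 1
            # Minkowski sum: inflate the static by the sweep's half-extents,
            # then test the path of the swept box's center against it.
            ilo = tuple(l - h for l, h in zip(lo, half_sweep))
            ihi = tuple(u + h for u, h in zip(hi, half_sweep))
            if segment_hits_aabb(start, direction, dist, ilo, ihi):
                exact += 1
    return coarse, exact
```

For a 50 × 50 grid with spacing 10, `count_candidates(50, 10.0)` returns `(2500, 50)`. Every static passes the coarse AABB test, but only the 50 on the diagonal are actually touched.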

The results are quite revealing. And yes, the numbers are correct.

For this single sweep test, PhysX 3.3 is:

- 60X faster than PhysX 3.2

- 270X faster than PhysX 2.8.4

- 317X faster than Bullet

Spectacular, isn’t it?
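The three ratios above also give the implied gaps between the other engines on this single sweep. This is a back-of-the-envelope check using the rounded figures, not a separate measurement:

```latex
\frac{t_{2.8.4}}{t_{3.2}} = \frac{270}{60} = 4.5
\qquad
\frac{t_{\text{Bullet}}}{t_{2.8.4}} = \frac{317}{270} \approx 1.17
```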