The evolution of PhysX (3/12) - Rigid bodies (convex stacks)

Saturday, May 11th, 2013

We continue with stacks, this time stacks of convex objects. This is where things start to become interesting. There are multiple things to be aware of here:

- Regular contact generation between boxes is a lot easier (and a lot cheaper) than contact generation between convexes. So PCM-based engines should take the lead here in theory.

- But performance depends a lot on the complexity of the convex objects as well.

- It also depends on whether the engine uses a “contact cache” or not.

Overall it’s rather hard to predict. So let’s see what we get.

We have two scenes here, for “small” and “large” convexes. Small convexes have few vertices, large convexes have a lot of vertices. The number of vertices has a high impact on performance, especially for engines using SAT-based contact generation.
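To give a feel for why the vertex count hurts SAT so much, here is a minimal 2D sketch. It is not code from PhysX or any other engine, and the names (`sat_overlap`, `regular_polygon`) are made up for the illustration. In 2D the candidate axes are the edge normals of both polygons, and every axis requires projecting every vertex, so the naive cost grows roughly with the square of the vertex count. In 3D it gets worse, since pairs of edges contribute candidate axes as well.

```python
import math


def edge_normals(poly):
    """Return one (unnormalized) normal per edge of a convex polygon."""
    normals = []
    count = len(poly)
    for i in range(count):
        x0, y0 = poly[i]
        x1, y1 = poly[(i + 1) % count]
        normals.append((y1 - y0, -(x1 - x0)))
    return normals


def project(poly, axis):
    """Project all vertices on the axis and return the (min, max) interval."""
    dots = [x * axis[0] + y * axis[1] for x, y in poly]
    return min(dots), max(dots)


def sat_overlap(a, b):
    """Return (overlapping, axes_tested) for two convex polygons."""
    tested = 0
    for axis in edge_normals(a) + edge_normals(b):
        tested += 1
        min_a, max_a = project(a, axis)
        min_b, max_b = project(b, axis)
        if max_a < min_b or max_b < min_a:
            return False, tested  # found a separating axis, early out
    return True, tested  # no separating axis: the shapes overlap


def regular_polygon(vertex_count, radius=1.0, center=(0.0, 0.0)):
    """Hypothetical helper: build a regular convex polygon."""
    step = 2.0 * math.pi / vertex_count
    return [(center[0] + radius * math.cos(i * step),
             center[1] + radius * math.sin(i * step))
            for i in range(vertex_count)]
```

Note that the overlapping case is the expensive one, because there is no early out: all axes have to be tested. That is exactly the situation in a stack, where every object touches its neighbours.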

---

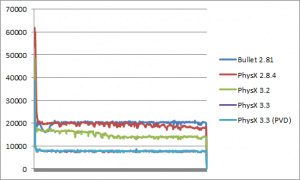

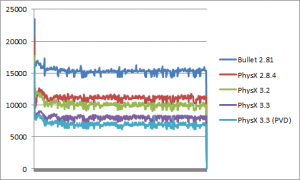

The first scene (“ConvexStack”) uses the small convexes. Each PhysX version is again a bit faster than the previous one, so that’s good, that’s what we want.

There doesn’t seem to be any speed difference between PCM and regular contact generation. In fact PCM even has a slightly worse worst case, maybe because of the overhead of managing the persistent manifolds. I suppose it shows that SAT-based contact generation is a viable algorithm for small convexes, which is something we knew intuitively.
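For readers wondering what “managing the persistent manifolds” involves, here is a rough sketch of the usual bookkeeping. It is a simplified illustration, not the PhysX or Bullet implementation: the class name, the thresholds and the translation-only poses are all assumptions made to keep it short. The idea is that the narrow phase only produces one contact point per frame, and the manifold accumulates up to four of them over time while dropping the ones that became invalid.

```python
import math


def add(a, b):
    return tuple(x + y for x, y in zip(a, b))


def sub(a, b):
    return tuple(x - y for x, y in zip(a, b))


def length(v):
    return math.sqrt(sum(x * x for x in v))


class PersistentManifold:
    """Hypothetical manifold for one pair of bodies (rotation ignored)."""

    def __init__(self, merge_distance=0.02, break_distance=0.05, max_points=4):
        self.merge_distance = merge_distance
        self.break_distance = break_distance
        self.max_points = max_points
        self.points = []  # each entry: (point local to A, point local to B)

    def refresh(self, pos_a, pos_b):
        """Drop cached points whose two anchors drifted too far apart."""
        kept = []
        for local_a, local_b in self.points:
            drift = length(sub(add(pos_a, local_a), add(pos_b, local_b)))
            if drift <= self.break_distance:
                kept.append((local_a, local_b))
        self.points = kept

    def add_point(self, pos_a, pos_b, world_point):
        """Insert the single contact point found this frame."""
        local_a = sub(world_point, pos_a)
        local_b = sub(world_point, pos_b)
        for i, (cached_a, _) in enumerate(self.points):
            if length(sub(cached_a, local_a)) <= self.merge_distance:
                self.points[i] = (local_a, local_b)  # update the near-duplicate
                return
        if len(self.points) == self.max_points:
            # Simplistic eviction: drop the oldest point. A production engine
            # would choose more carefully, e.g. to keep the contact area large.
            self.points.pop(0)
        self.points.append((local_a, local_b))
```

The refresh and merge passes run every frame for every pair, even when nothing moves. That is the kind of fixed overhead that could explain a slightly worse worst case when the contact generation itself is already cheap.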

Now, 3.3 is about 2X faster than 3.2 on average, even when they both use SAT. The perf difference here probably comes from two things: 3.3 uses extra optimizations like “internal objects” (this probably explains the better worst case) and it also uses a “contact cache” to avoid regenerating all contacts all the time (this probably explains the better average case).
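The contact cache idea can be sketched in a few lines. This is only an illustration of the general principle under my own assumptions, not how PhysX 3.3 actually implements it: the `ContactCache` class, the flat tuple used as a relative pose and the tolerance value are all hypothetical. The point is simply that when two objects have barely moved relative to each other, last frame's contacts can be reused instead of running the expensive contact generation again.

```python
class ContactCache:
    """Hypothetical cache that reuses contacts while a pair stays put."""

    def __init__(self, generate, tolerance=1e-3):
        self._generate = generate    # expensive contact generation function
        self._tolerance = tolerance  # max allowed change per pose component
        self._entries = {}           # pair -> (relative pose, contacts)
        self.regenerations = 0       # how many times we paid the full cost

    def contacts(self, pair, relative_pose):
        entry = self._entries.get(pair)
        if entry is not None:
            cached_pose, cached_contacts = entry
            drift = max(abs(a - b) for a, b in zip(cached_pose, relative_pose))
            if drift <= self._tolerance:
                return cached_contacts  # cheap path: nothing moved enough

        # Expensive path: first time we see the pair, or it moved too much.
        self.regenerations += 1
        result = self._generate(pair, relative_pose)
        self._entries[pair] = (tuple(relative_pose), result)
        return result
```

In a stack that has settled, almost every pair takes the cheap path, which would fit the better average case seen here.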

As for 2.8.4 and Bullet, they’re of similar speed overall, and both are significantly slower than 3.3. We see that 2.8.4 has the worst worst case of all, which is probably due to the old SAT-based contact generation used there – it lacked a lot of the optimizations we introduced later.

As with box stacks, the initial frame is a lot more expensive than subsequent frames. Contrary to box stacks though, I think a lot of it is due to the initial contact generation. Engines sometimes use temporal coherence to cache various data from one frame to the next, and that’s why the first contact generation pass is more expensive. It should be pretty clear on a scene like this, where all objects are generating their initial contacts in the same frame, and then they don’t move much anymore. This is not a very natural scenario (most games use “piles” of debris rather than “stacks” of debris), but those artificial scenes are handy to check your worst case.

---

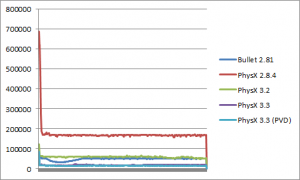

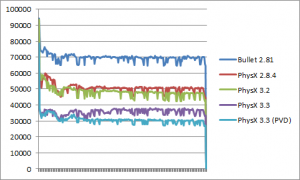

The second scene (“ConvexStack3”) uses large convexes. Now look at that! Very interesting stuff here.

Each PhysX version is again faster than the previous one, that’s good. But the differences are massive now, with PhysX 3.3 an order of magnitude faster than PhysX 2.8.4, and almost 3X faster than 3.2. Well it’s nice to see that our efforts paid off.

In terms of PCM vs SAT, it seems pretty clear that PCM gives better performance for large convexes. We see this with PhysX 3.3, but also very clearly with Bullet. It is the first time so far that Bullet manages to be faster than PhysX. It is not really a big surprise: SAT is not a great algorithm for large convexes, we knew that.

On the other hand what we didn’t know is how much slower 2.8.4 could be, compared to the others. I think it is time to upgrade to PhysX 3.3 if you are using large convexes.

Another thing to mention is the memory usage for 3.2. We saw this trend before, and it seems to be worse in this scene: 3.2 is using more memory than it should. The issue has been fixed in 3.3 though.